We begin evaluations by finding out the purpose of the research. Typically this falls into one or more of these categories:

Influencing policy: we identify what we need to demonstrate in order to convince policy-making audiences, and the data we need to collect to serve as reliable evidence

Continuous learning: we identify outcomes which indicate the extent to which the programme is working and which provide insight into where improvements might need to be made once the programme is underway

Evaluation reports: we design a research plan that enables us to produce a baseline report, an interim report and an end-of-programme report, which together demonstrate the difference made and the reasons driving the impact

Outcome Discovery is a process we use to determine the expected and unexpected outcomes that your programmes achieve. This involves looking internally - engaging with staff and volunteers - as well as externally by listening to beneficiaries to understand the impact from their perspective. We look for existing evidence of the long-term outcomes of your work and standardised outcome frameworks already used within your sector, e.g. The Outcome Frameworks and Shared Measures database created by The National Lottery Community Fund.

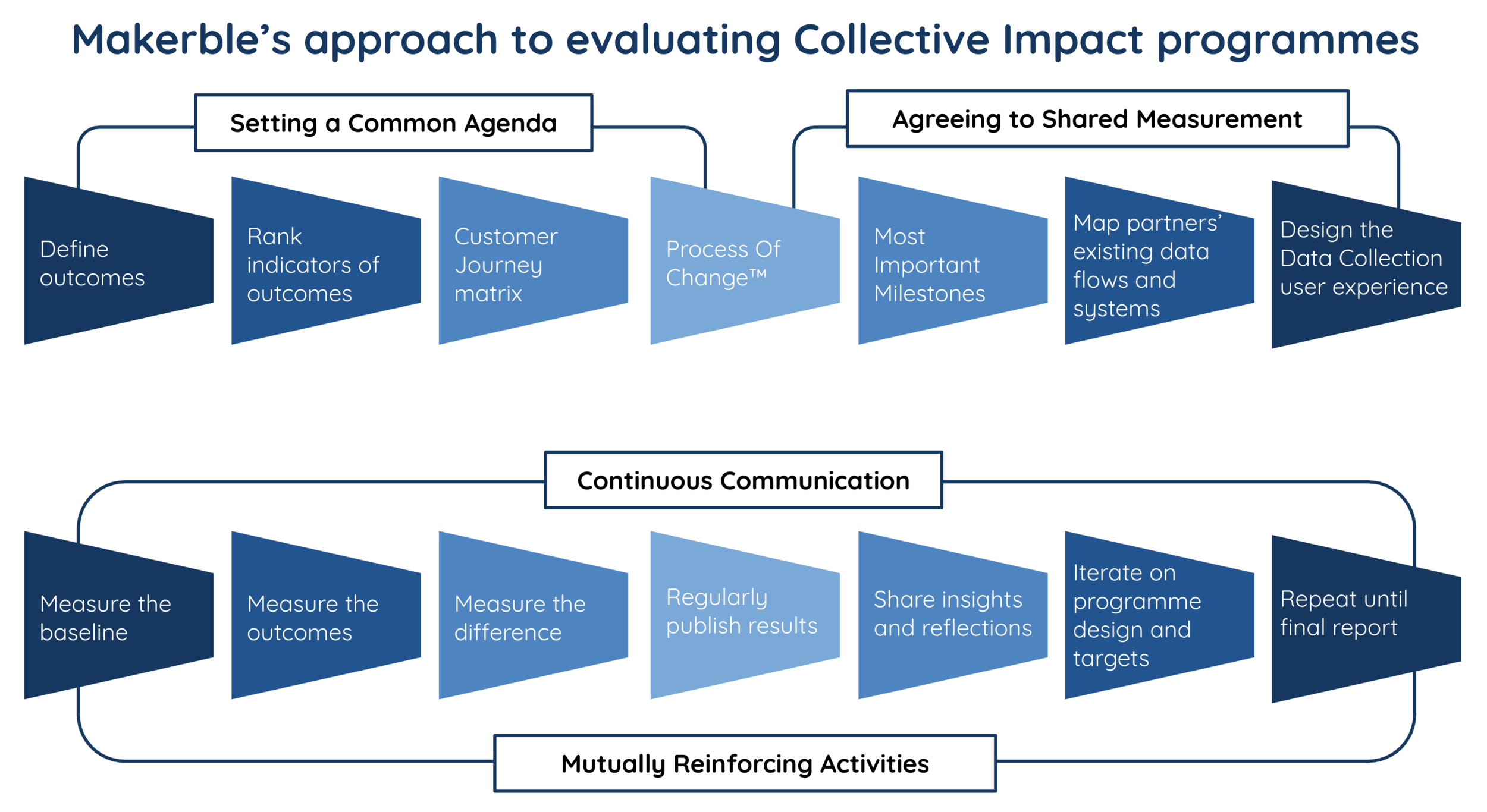

The Process Of Change is one of the tools we use to make sense of the complex set of outcomes that often arises during the Outcome Discovery phase. By placing each outcome into one of three types of change, we can accelerate agreement around the language we use to describe the difference your programmes make. We group outcomes into:

Changes in how people Think & Feel: i.e. outcomes related to knowledge, attitudes, beliefs and internal capacity

Changes in what people Do: i.e. outcomes related to behaviour, habits, achievements and corporate or government policies

Changes in what people Have: i.e. outcomes related to wellbeing, quality of relationships, health and wealth

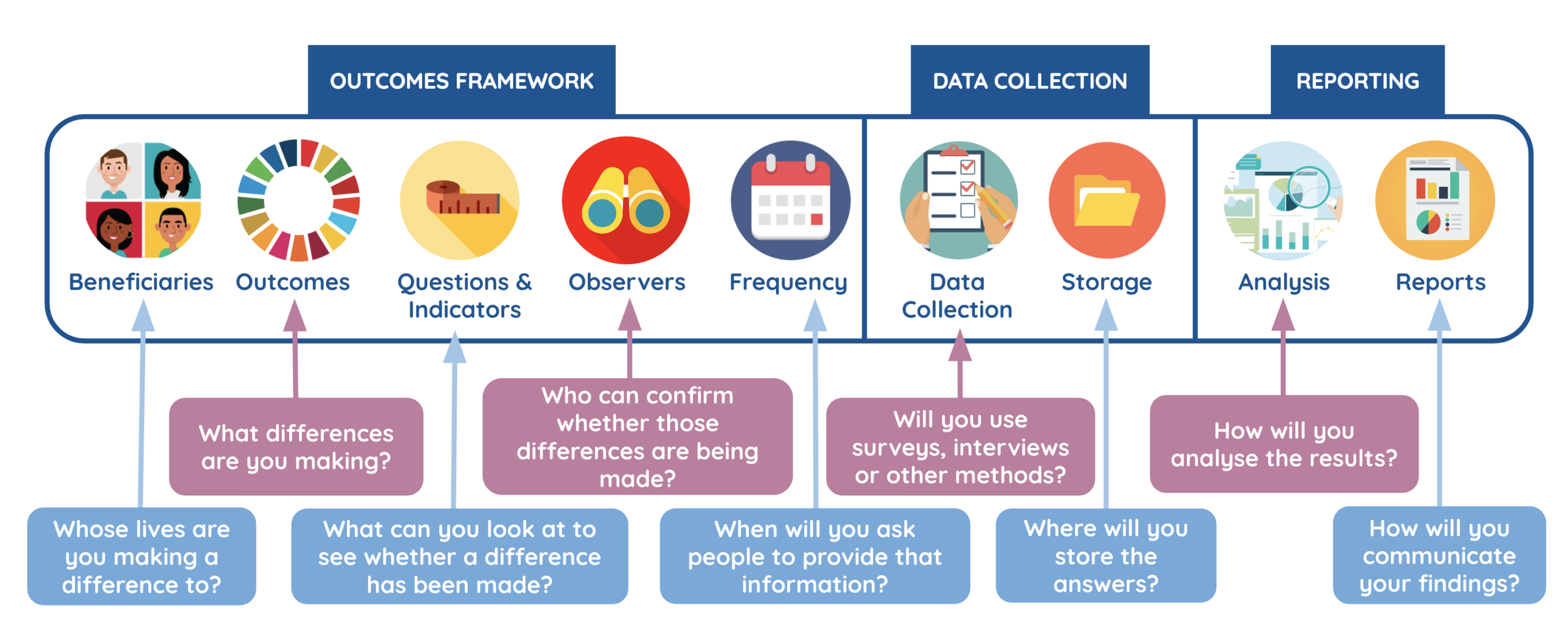

Data Collection: With the set of outcomes confirmed, we drill down to a practical set of indicators which can be woven into existing data collection processes wherever possible and supplemented by additional data collection when needed.

This culminates in the creation of the baseline, interim and final evaluation reports required.

Continuous Learning: In addition to the summative evaluation, we use workshops and digital tools to make the evaluation formative, so that your delivery teams can reflect at regular intervals on the lessons surfaced by the evaluation.

To find out more about our approach to evaluation, including Makerble Learning Boards, contact Matt Kepple, who leads our evaluation practice. Email: [email protected]. Phone: +44 (0) 7950 421 815.