Find out why relying solely on anecdotes and gut instincts can be tempting but ultimately insufficient when it comes to measuring your impact.

Exploring Brand Impact: A Comprehensive Three-tiered Assessment

Measuring the impact of branding involves dissecting its influence across three crucial levels, providing a deeper understanding of its effects on consumer perceptions, behaviors, and business outcomes.

First, there is how individuals perceive a brand. This level is about brand recall: what sticks in someone's mind when they think about the brand. Measuring recall before and after a rebrand lets us gauge how the changes have altered consumer perceptions, which reflects the effectiveness of the rebranding strategy.
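One way to put numbers on that before-and-after comparison is to survey comparable samples before and after the rebrand and test whether the recall rate changed. The sketch below is a minimal illustration, assuming two independent random samples and using a standard pooled two-proportion z-test. The survey counts are hypothetical, and a significant result shows that recall changed, not that the branding alone caused the change.

```python
import math


def recall_rate(recalled: int, surveyed: int) -> float:
    """Share of surveyed respondents who recalled the brand."""
    return recalled / surveyed


def two_proportion_z(before_hits: int, before_n: int,
                     after_hits: int, after_n: int) -> float:
    """Pooled z statistic for the change in recall between two independent samples."""
    p_before = before_hits / before_n
    p_after = after_hits / after_n
    pooled = (before_hits + after_hits) / (before_n + after_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / before_n + 1 / after_n))
    if se == 0:
        raise ValueError("recall is 0% or 100% in both samples; the test is undefined")
    return (p_after - p_before) / se


def two_sided_p_value(z: float) -> float:
    """Two-sided p-value from the normal approximation: P(|Z| >= |z|)."""
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical survey results: 120 of 400 recalled the brand before, 170 of 400 after.
z = two_proportion_z(120, 400, 170, 400)
print(f"recall before: {recall_rate(120, 400):.1%}, after: {recall_rate(170, 400):.1%}")
print(f"z = {z:.2f}, p = {two_sided_p_value(z):.4f}")
```

The same pattern applies to the behavioural benchmarks in the next level, as long as the measure can be expressed as a proportion of a sample.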

Moving beyond perception, the second level delves into behavioral changes among stakeholders. Internally, it could mean shifts in staff behaviors and company culture. Externally, it's about observing changes in consumer habits or increased engagement from other stakeholders. Benchmarking these behaviors before and after the rebrand provides tangible evidence of the branding's impact on actions and engagements over time.

Lastly, there's the tangible business impact. This involves assessing direct effects on revenue, market expansion, or reaching new customer segments. Although attributing success to branding alone is difficult, increased revenue streams or market penetration after a rebrand suggest that the branding has had a significant business impact.

Attribution matters. While it's challenging to credit any change solely to branding, this three-tiered approach lets us show the role branding played in these transformations.

When discussing potential rebranding projects with clients, employing these three levels offers a comprehensive view of the potential impact. By illustrating the cognitive, behavioral, and business alterations brought about by successful branding endeavors, clients can envision the significant changes that a rebrand or brand refresh could bring to their business.

How to actually make more impact as a charity

Plan, do, review.

This is the dream of any good monitoring and evaluation process, and the thing we all know, in theory, should happen regularly when delivering charity services. Especially when funds are tight, making services more streamlined and effective is good for everyone.

We all have good intentions around this, but two key things are quite often missing:

Good quality, reliable data

Easy to use reporting tools

Without these things, the review process becomes difficult and, in some cases, impossible.

So how can we actually close the loop and use what we know to improve charity services?

One way is to pay for a large-scale evaluation project, but what if the process could be simpler? What if decisions could be made more quickly, based on real-time data that your team are motivated to collect?

Firstly, let’s talk about data.

There are two key things that make for excellent, quality data collection:

Easy-to-use data systems that make data capture a dream rather than a chore.

People who are motivated to collect data.

Without both of these things, you will never have good data to work with. You can invest all the money in the world in a new system, but if your team aren’t motivated, that system will quickly fall out of use, left empty or full of unusable data. You can also have the most willing team in the world, but without a good system in place, data collection can quickly become a chore. Let’s be honest: most people don’t work in the charity sector because they’re motivated by data collection, but it is a key part of the job, so making sure your systems are easy to use will help a lot. A good system alone will never be enough to motivate everyone, but there is a secret tool that can help.

Easy-to-use reporting.

This is the key not only to reporting on your impact but also to encouraging people to collect data. When individuals can SEE the difference they are making at a glance, data input becomes far more motivating. What if they could set goals for their beneficiaries or clients and then see, in real time, how close they are to achieving them? They’d be more likely to input that data in the first place.
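To make “how close they are” concrete, progress towards a goal can be expressed as the share of the distance from a starting point to a target that has been covered so far. The sketch below assumes each goal records a baseline, a target and a current value; the `Goal` class, its fields and the text progress bar are hypothetical illustrations, not Makerble’s actual data model.

```python
from dataclasses import dataclass


@dataclass
class Goal:
    """A hypothetical goal set for a beneficiary or client."""
    description: str
    baseline: float  # value when the goal was set
    target: float    # value that counts as achieving the goal
    current: float   # latest recorded value

    def progress(self) -> float:
        """Fraction of the distance from baseline to target covered so far, clamped to [0, 1].

        Works for goals that go up (sessions attended) or down (missed appointments).
        """
        span = self.target - self.baseline
        if span == 0:
            return 1.0  # target equals baseline, so treat the goal as already met
        fraction = (self.current - self.baseline) / span
        return max(0.0, min(1.0, fraction))


def progress_bar(fraction: float, width: int = 20) -> str:
    """Render a fraction in [0, 1] as a simple text bar."""
    filled = round(fraction * width)
    return "#" * filled + "-" * (width - filled)


goals = [
    Goal("Attend 10 weekly sessions", baseline=0, target=10, current=7),
    Goal("Reduce missed appointments per month", baseline=6, target=2, current=4),
]
for goal in goals:
    print(f"{goal.description:<40} [{progress_bar(goal.progress())}] {goal.progress():.0%}")
```

Measuring from a baseline rather than from zero means a goal such as reducing missed appointments shows progress in the same way as one that counts upwards.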

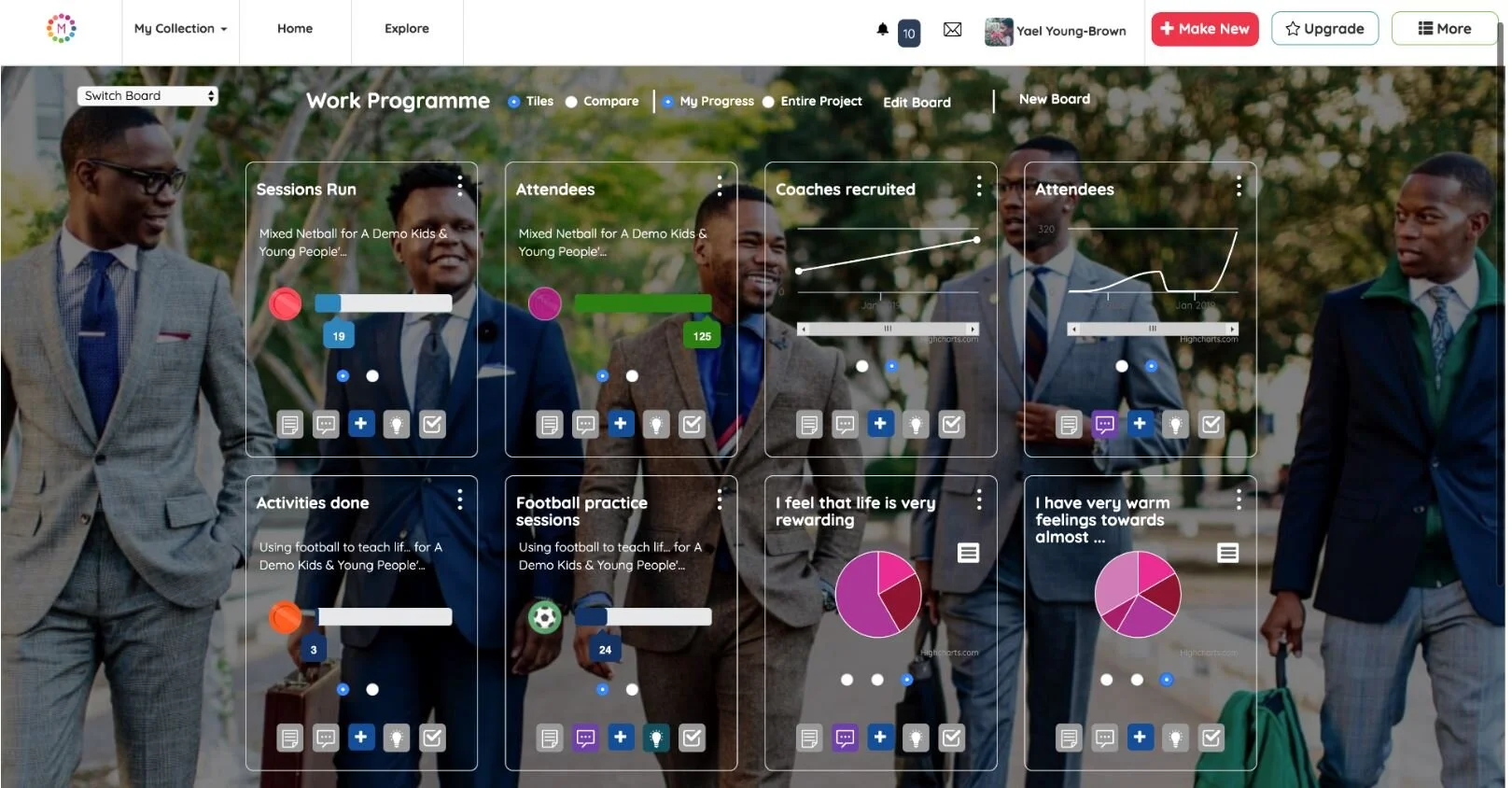

Tracking goals and capturing data is made easy with Makerble

Pretty charts and dashboards

Now that the team are motivated to collect data, how do you report on it? With traditional systems, you may be used to downloading it all and analysing it in Excel. What if you had dashboards that tracked all of your progress? You could look at your data in real time and spot things like “Wow, I didn’t realise we were helping that many people” or “It’s interesting that we’ve seen a drop-off in attendance recently; I wonder why that may be”, and much more.
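The attendance example can be sketched as a simple rule a dashboard might apply: compare average attendance over the most recent few weeks with the few weeks before and flag a fall larger than some threshold. The function name, the four-week window and the 20% threshold below are hypothetical choices for illustration. A flag is a prompt to ask why, not an explanation.

```python
from statistics import mean


def attendance_drop(weekly_counts: list[int], window: int = 4,
                    threshold: float = 0.2) -> tuple[float, bool]:
    """Compare mean attendance in the last `window` weeks with the `window` weeks before.

    Returns the relative change (negative means a fall) and whether the fall
    is at least `threshold` (0.2 = 20%).
    """
    if window < 1 or len(weekly_counts) < 2 * window:
        raise ValueError("need at least two full windows of weekly data")
    recent = mean(weekly_counts[-window:])
    previous = mean(weekly_counts[-2 * window:-window])
    if previous == 0:
        return 0.0, False  # no earlier attendance to compare against
    change = (recent - previous) / previous
    return change, change <= -threshold


# Hypothetical weekly attendance for one service, oldest week first.
weeks = [30, 32, 31, 29, 24, 22, 21, 23]
change, flagged = attendance_drop(weeks)
print(f"Change vs previous four weeks: {change:+.0%}; flag for review: {flagged}")
```

Comparing averages over a window, rather than week against week, keeps a single quiet week from triggering the flag.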

That’s why we’re here.

Our ethos is to help you Learn, Adapt and Repeat. We want data collection to feel easy and reporting to be a breeze. Not just so you can report to your funders and stakeholders (although our customisable public dashboards mean you can share your stats with them instantly; no, seriously), but so you can use your data to make changes, adapt your services and, as a result, have a greater impact.

We’d love to chat

We’re so passionate about this and we’d love to hear more about you and the current challenges you face around this. So, if you’re interested in hearing more, you can book in a chat with one of us today.

Databases don’t have to be scary…

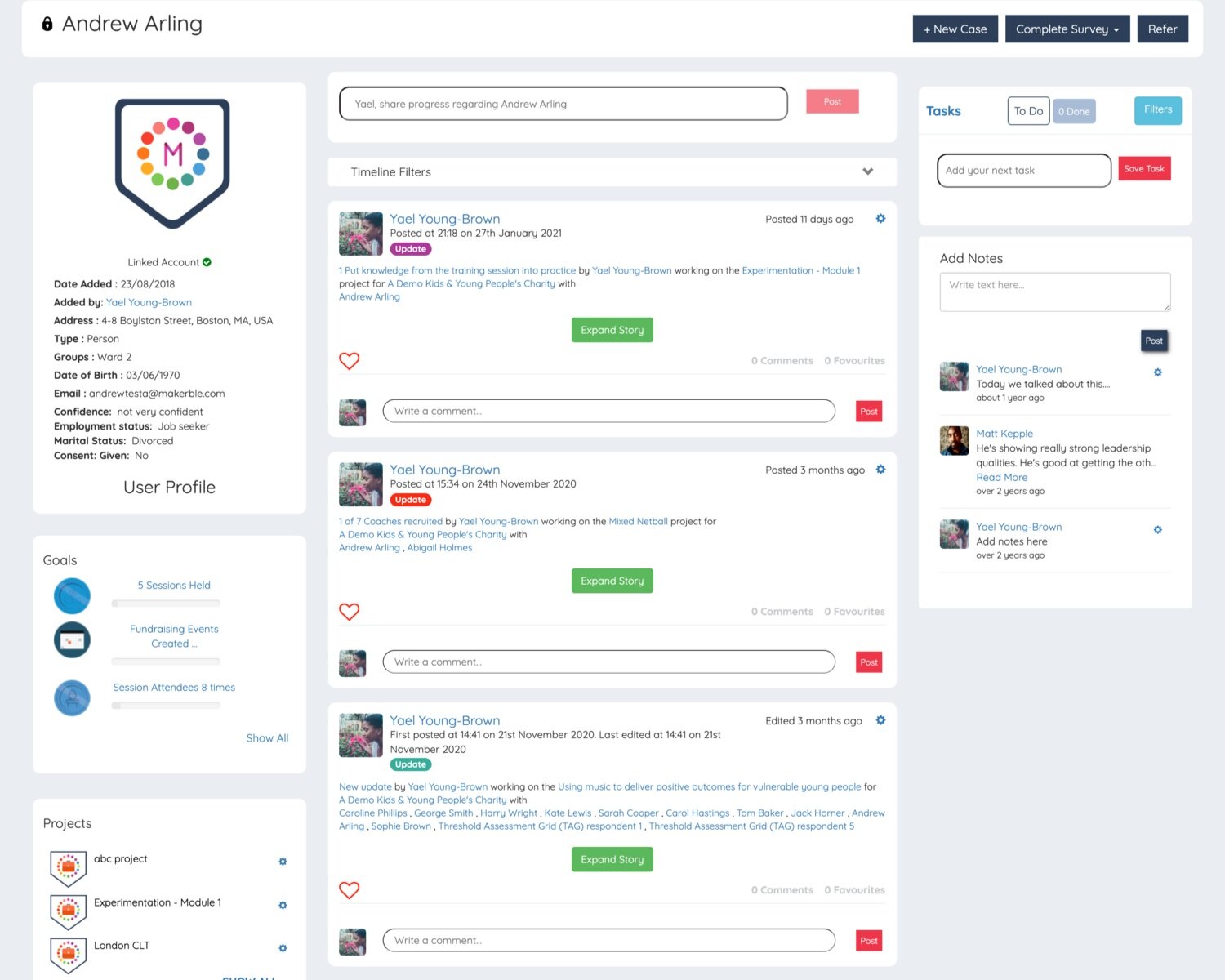

Evaluating a programme from the outset

We begin evaluations by finding out the purpose of the research. Typically this falls into one or more of these categories:

Influencing policy: we identify what we need to prove in order to convince policy-making audiences and the data we need to collect that will serve as reliable evidence

Continuous learning: we identify outcomes which indicate the extent to which the programme is working and which provide insight into where improvements might need to be made once the programme is underway

Evaluation reports: we create a research plan that enables us to produce a baseline report, an interim report and an end-of-programme report, which demonstrate the difference made and the reasons driving the impact

Outcome Discovery is a process we use to determine the expected and unexpected outcomes that your programmes achieve. This involves looking internally - engaging with staff and volunteers - as well as externally by listening to beneficiaries to understand the impact from their perspective. We look for existing evidence of the long-term outcomes of your work and standardised outcome frameworks already used within your sector, e.g. The Outcome Frameworks and Shared Measures database created by The National Lottery Community Fund.

The Process Of Change is one of the tools we use to make sense of the complex set of outcomes that often arise during the Outcome Discovery phase. By grouping outcomes into one of three types of change, we can accelerate agreement around the language we use to describe the difference your programmes make. We group outcomes into:

Changes in how people Think & Feel: i.e. outcomes related to knowledge, attitudes, beliefs and internal capacity

Changes in what people Do: i.e. outcomes related to behaviour, habits, achievements and corporate or government policies

Changes in what people Have: i.e. outcomes related to wellbeing, quality of relationships, health and wealth
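As a rough illustration of how outcomes gathered during Outcome Discovery might be sorted into these three types of change, the sketch below tags each outcome with a type and groups them. The example outcomes are hypothetical, and the code captures only the three-way grouping described above, not the full Process Of Change tool.

```python
from enum import Enum


class ChangeType(Enum):
    THINK_AND_FEEL = "Think & Feel"  # knowledge, attitudes, beliefs, internal capacity
    DO = "Do"                        # behaviour, habits, achievements, policies
    HAVE = "Have"                    # wellbeing, relationships, health, wealth


def group_outcomes(outcomes: dict[str, ChangeType]) -> dict[ChangeType, list[str]]:
    """Group outcome statements by type of change.

    Every type appears in the result, even if empty, so gaps in the framework are visible.
    """
    grouped: dict[ChangeType, list[str]] = {change_type: [] for change_type in ChangeType}
    for outcome, change_type in outcomes.items():
        grouped[change_type].append(outcome)
    for statements in grouped.values():
        statements.sort()
    return grouped


# Hypothetical outcomes from an employability programme.
discovered = {
    "Participants know how to write a CV": ChangeType.THINK_AND_FEEL,
    "Participants feel more confident about interviews": ChangeType.THINK_AND_FEEL,
    "Participants attend job interviews": ChangeType.DO,
    "Participants report improved wellbeing": ChangeType.HAVE,
}
for change_type, statements in group_outcomes(discovered).items():
    print(f"{change_type.value}:")
    for statement in statements:
        print(f"  - {statement}")
```

Listing empty groups alongside full ones makes it obvious when a programme has, say, plenty of Think & Feel outcomes but nothing yet under Have.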

Data Collection: With the set of outcomes confirmed, we drill down to a practical set of indicators which can be woven into existing data collection processes wherever possible and supplemented by additional data collection when needed.

This culminates in the creation of the baseline, interim and final evaluation reports required.

Continuous Learning: In addition to the summative evaluation, we use workshops and digital tools to make the evaluation formative so that your delivery teams have the opportunity to reflect on the learnings surfaced by the evaluation at regular intervals.

To find out more about our approach to evaluation, including Makerble Learning Boards, contact Matt Kepple, who leads our evaluation practice, on +44 (0) 7950 421 815.

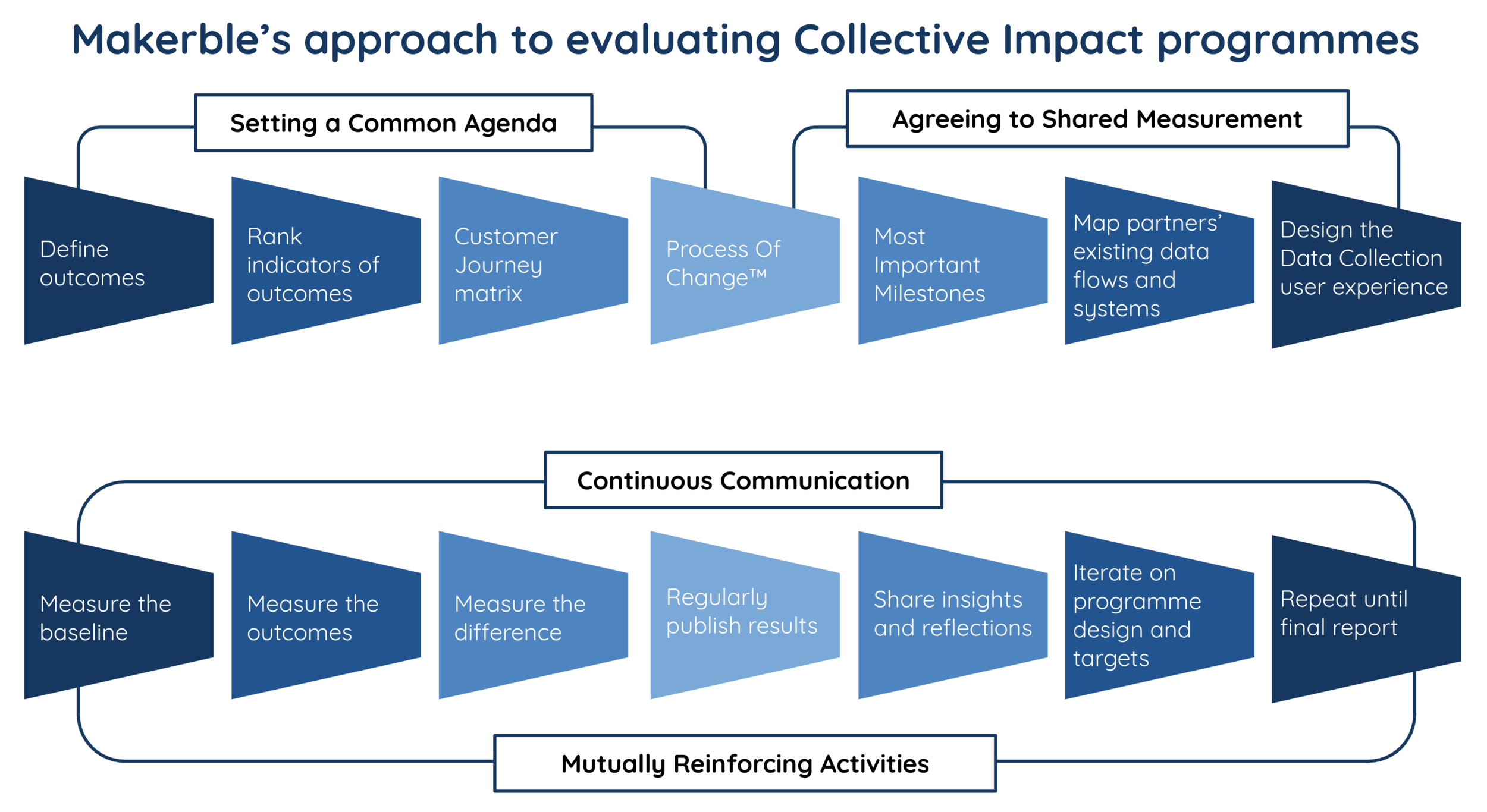

Evaluating a Collective Impact programme

Collective Impact programmes present unique challenges for evaluations due to the often complex arrangement of partners who are each producing data that could inform an evaluation. To get around this, we take a collaborative approach to planning the impact methodology which intentionally draws upon the insight and expertise of the respective partners. Our approach is anchored to the Five Pillars of Collective Impact. The fifth pillar, a strong backbone organisation, is typically the ‘lead’ organisation within the partnership which commissions Makerble as the evaluation partner in the first place.

Setting a common agenda is an essential first step to evaluating a Collective Impact programme. We use our proprietary Process Of Change™ framework in workshops with partners to enable everyone to agree on shared language that describes the outcomes being achieved at each stage of a person’s journey through your programme. This typically involves mapping out the ‘customer journey’ that a service user, participant or beneficiary might take through your programme.

Agreeing to Shared Measurement requires partners to distil a pragmatic set of indicators which will be reported across the programme. These shared measures open the door to continuous improvement as partners are able to see their collective results and have open dialogue about factors which are acting as enablers and inhibitors to people’s progress through the programme. Part of the role Makerble plays when evaluating collective impact programmes is in providing the dashboards which enable shared measurement to happen and facilitating the conversations which enable continuous reflection, learning and iteration to take place.
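Shared measurement is easier to picture with a small example. The sketch below assumes each partner submits rows of (partner, indicator, value) and that the partnership has agreed a fixed list of shared indicators. It totals each agreed indicator across partners, keeps a per-partner breakdown to support the conversation about enablers and inhibitors, and sets aside anything outside the agreed list. The indicator names, partners and figures are hypothetical.

```python
from collections import defaultdict

# Hypothetical indicators agreed by the partnership.
SHARED_INDICATORS = {"participants_enrolled", "participants_completed"}


def aggregate_shared_measures(rows, agreed=SHARED_INDICATORS):
    """Total agreed indicators across partners.

    rows: iterable of (partner, indicator, value) tuples.
    Returns (totals, by_partner, rejected): totals maps indicator -> sum,
    by_partner maps partner -> {indicator: sum}, and rejected lists rows
    whose indicator is not in the agreed set.
    """
    totals = defaultdict(int)
    by_partner = defaultdict(lambda: defaultdict(int))
    rejected = []
    for partner, indicator, value in rows:
        if indicator not in agreed:
            rejected.append((partner, indicator, value))
            continue
        totals[indicator] += value
        by_partner[partner][indicator] += value
    return dict(totals), {p: dict(v) for p, v in by_partner.items()}, rejected


rows = [
    ("Partner A", "participants_enrolled", 40),
    ("Partner B", "participants_enrolled", 25),
    ("Partner A", "participants_completed", 30),
    ("Partner B", "participants_completed", 15),
    ("Partner B", "volunteer_hours", 120),  # not an agreed shared measure
]
totals, by_partner, rejected = aggregate_shared_measures(rows)
completion = totals["participants_completed"] / totals["participants_enrolled"]
print(f"Programme completion rate: {completion:.0%}")
for partner, values in by_partner.items():
    rate = values["participants_completed"] / values["participants_enrolled"]
    print(f"{partner}: completion rate {rate:.0%}")
```

Rejecting unagreed indicators rather than silently summing them keeps the shared totals comparable across partners.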

To find out more about how we can conduct the evaluation of your Collective Impact programme, contact Matt Kepple on +44 (0) 7950 421 815.

To find out more about the tools and techniques we use, visit our Building Blocks of Measurement.

Evaluating a funder's impact

Most funders approach impact reporting by communicating these three things:

Case studies about grantees

Amounts spent, split by cause and/or location

Number of grants made, split by cause and/or by location

Whilst this is a good place to start, it only tells part of your story. We help you go further and measure the difference your grants have made.

We recognise that as a funder you have three levels of impact:

Aggregate impact: the combined outcomes of the projects you make grants to

Grants Plus impact: the difference that your support makes to your grantees’ projects beyond the financial benefit, for example, increased profile or improved beneficiary recruitment

Strategic impact: the extent to which you are achieving your mission as defined in your Theory of Change
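Of the three levels, aggregate impact is the most straightforward to tally: add up the outcomes grantees report, alongside the grant counts and amounts funders already publish. The sketch below assumes grantees report counts against common outcome metrics, since summing only makes sense when definitions match. The `GrantReport` structure, grantee names, causes and figures are hypothetical. Grants Plus and strategic impact need different evidence and are not covered here.

```python
from dataclasses import dataclass


@dataclass
class GrantReport:
    """A hypothetical end-of-grant report from one grantee."""
    grantee: str
    cause: str
    amount: float
    outcomes: dict[str, int]  # outcome metric -> count reported by the grantee


def aggregate_impact(reports: list[GrantReport]) -> dict[str, dict]:
    """Combine grant counts, amounts and reported outcomes by cause."""
    summary: dict[str, dict] = {}
    for report in reports:
        entry = summary.setdefault(report.cause, {"grants": 0, "amount": 0.0, "outcomes": {}})
        entry["grants"] += 1
        entry["amount"] += report.amount
        for metric, count in report.outcomes.items():
            entry["outcomes"][metric] = entry["outcomes"].get(metric, 0) + count
    return summary


reports = [
    GrantReport("Grantee A", "Youth", 10000, {"young people reporting improved confidence": 45}),
    GrantReport("Grantee B", "Youth", 5000, {"young people reporting improved confidence": 20,
                                             "young people entering training": 8}),
    GrantReport("Grantee C", "Food poverty", 8000, {"households supported": 120}),
]
for cause, entry in aggregate_impact(reports).items():
    print(f"{cause}: {entry['grants']} grants, £{entry['amount']:,.0f}")
    for metric, count in entry["outcomes"].items():
        print(f"  {metric}: {count}")
```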

At each level of impact there is the opportunity to report on the outputs and outcomes of your work. That said, decisions about impact measurement need to be mindful of the capacity of grantees so as not to burden them with reporting requirements which are disproportionate to the funding they receive.

At Makerble we use our Impact Measurement Methodology to identify the minimal set of metrics you need to measure and devise practical ways to measure them.

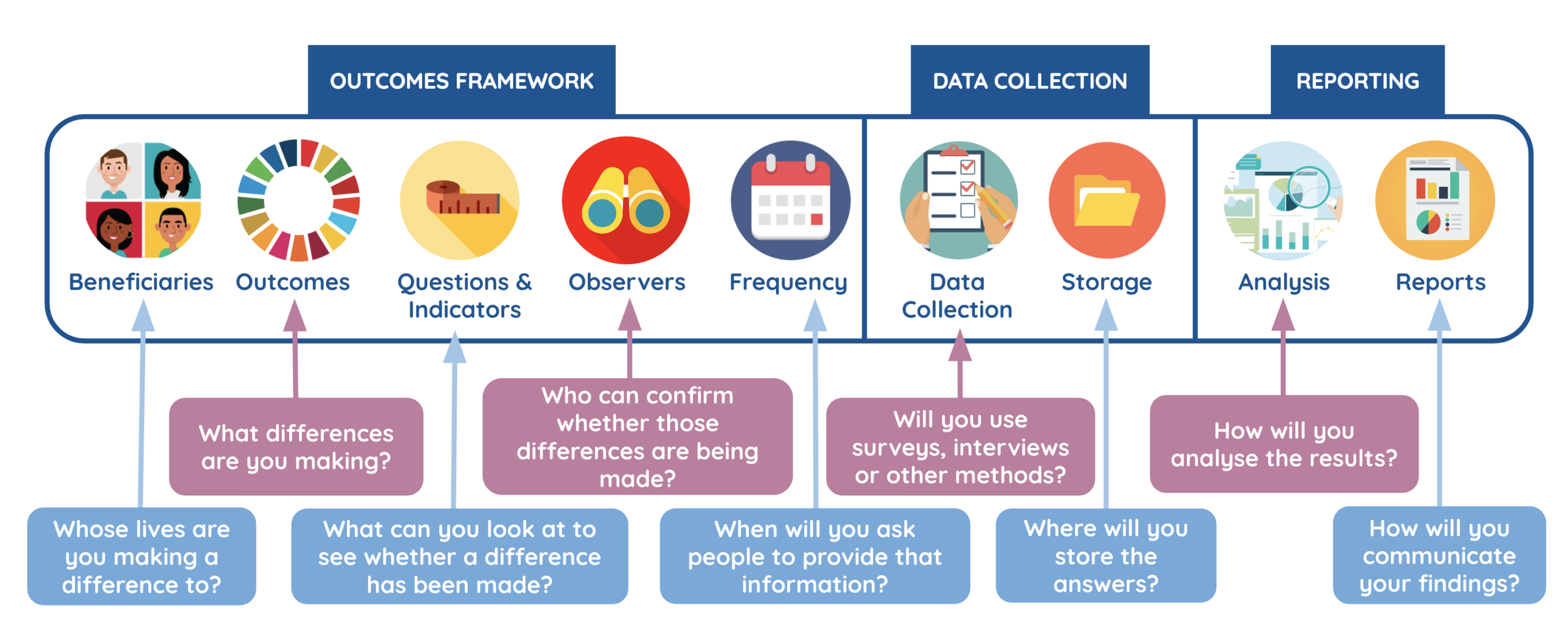

We can work with you across the full gamut of impact measurement or we can focus in on the one or two areas which are most important to you. Typically our work splits into these three phases.

Outcomes Framework: identifying the outcomes and metrics you need to measure

Data Collection: creating a data collection plan and either implementing it ourselves or supporting you to systemise it

Reporting: analysing your data, producing reports and giving you access to interactive dashboards that show you your data in real-time so you can use it to enhance decision-making

Contact us to talk through your current approach to impact measurement and explore how you can take your foundation’s impact measurement to the next level.